GREIS: Granular-Feedback Expanded Instrument System (2002-present)

GREIS (pronounced “grace”) is the Granular-Feedback Expanded Instrument System: my ever-evolving performance system and digital musical instrument, in the making since 2002. GREIS is built in Max/MSP as a collection of custom modules drawn from various sub-projects I have done over the years. The system focuses on sculpting and reshaping recorded or live-captured sounds through spectral and textural transformations. These are largely performed with hand gestures on a Wacom tablet (right hand), while the left hand modulates the sound or the nature of the control mapping. The unit of a “grain”, which may be, for example, a temporal fragment, a single partial or a transient component, is dispersed to different processes and fed back through the system. GREIS includes granular and spectral analysis/synthesis, complex mapping techniques, and generative processes that surprise me with machine-based decisions, forcing me to react in the moment.

The system is intended for the total flexibility of free improvisation, and I often play with acoustic musicians, sometimes using their sound as source material. In other projects, and when playing solo, I work with particular sets of recorded material, grouped according to their qualities and recalled in the moment of performance for sculpting and transformation. A more recent focus of this work is designing ways to navigate large databases of sound files grouped by their sonic qualities and to recall them in an improvised fashion (a form of “timbre space” navigation). I refer to this manual approach to sculpting recorded sound as multidimensional turntablism.

In more recent years, I have begun performing with voice, both as source material and as another means of sculpting recorded sounds, using audio mosaicing techniques combined in a unique way with vocal cross-synthesis methods, as illustrated in the diagram below:

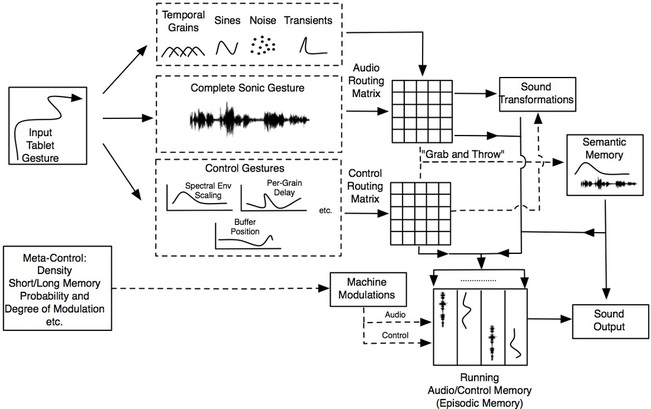
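As a rough illustration of the audio mosaicing idea mentioned above, the following Python sketch replaces each grain of a target signal (standing in for the voice) with the closest-sounding grain from a recorded corpus. It is not the GREIS implementation, which lives in Max/MSP. The function names, the two descriptors (RMS level and zero-crossing rate) and the non-overlapping grains are all assumptions chosen to keep the example small.

```python
import math

def descriptors(grain):
    """Return (rms, zero_crossing_rate) for one grain of at least 2 samples.

    These two features are an assumed, deliberately crude stand-in for
    whatever timbral descriptors a real system would use.
    """
    rms = math.sqrt(sum(x * x for x in grain) / len(grain))
    crossings = sum(1 for a, b in zip(grain, grain[1:]) if (a < 0) != (b < 0))
    return (rms, crossings / (len(grain) - 1))

def split_grains(signal, grain_len):
    """Cut a signal into consecutive, non-overlapping grains."""
    return [signal[i:i + grain_len]
            for i in range(0, len(signal) - grain_len + 1, grain_len)]

def mosaic(target, corpus, grain_len):
    """For each target grain, emit the corpus grain nearest in descriptor space."""
    corpus_grains = split_grains(corpus, grain_len)
    corpus_desc = [descriptors(g) for g in corpus_grains]
    out = []
    for grain in split_grains(target, grain_len):
        d = descriptors(grain)
        best = min(range(len(corpus_grains)),
                   key=lambda i: math.dist(d, corpus_desc[i]))
        out.extend(corpus_grains[best])
    return out
```

The same nearest-neighbour lookup could, under these assumptions, serve as a simple form of “timbre space” navigation: a position on the tablet would take the place of the target grain's descriptor pair.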

In performing with this system I am focused not only on improvised sonic sculpting using hands and voice, but also on interacting with the immediate past in a nonlinear way, one that introduces new gestural modulations into the musical mix in order to create coherent sonic structures over time. This performance practice with GREIS lets me immediately shape sound objects in both dramatic and subtle ways, and achieve a particular musical structure by building loops, textures and layers “by hand” in collaboration with the machine. Sonic gestures are thus essential to my work, while theatrical gestures are not my central concern. I think of the system as a complex dynamical system that has memory and hysteresis, like an acoustic instrument. From an interaction design point of view, I think in terms of metaphors when designing and performing with GREIS: sculpting and navigating, but also “grabbing” and “throwing” sounds in performance. Below is a diagram that represents the internal memory and sonic gestural representations of the system:

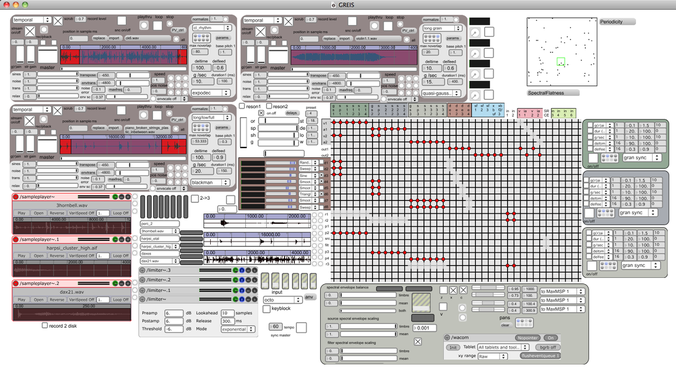
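The notions of memory, “grabbing” and “throwing” described above can be sketched in a few lines of Python. This is only a toy model under stated assumptions, not how GREIS is built: the `GrainMemory` class, its method names and the Hann window are hypothetical. It keeps a fixed-length memory of recent samples, lets the performer grab a windowed grain from any point in the immediate past, and throws that grain back into the newest part of the memory, so that the system's state depends on its own earlier output.

```python
import math
from collections import deque

class GrainMemory:
    """Fixed-length memory of the most recent samples (hypothetical helper)."""

    def __init__(self, size):
        self.buf = deque([0.0] * size, maxlen=size)

    def write(self, samples):
        """Append new input; the oldest samples fall off the far end."""
        self.buf.extend(samples)

    def grab(self, delay, length):
        """Copy a Hann-windowed grain that starts `delay` samples in the past."""
        if not 1 < length <= delay <= len(self.buf):
            raise ValueError("grain must lie inside the memory")
        start = len(self.buf) - delay
        window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (length - 1))
                  for n in range(length)]
        return [self.buf[start + n] * window[n] for n in range(length)]

    def throw(self, grain, gain=0.5):
        """Feed a grain back: mix it, scaled by `gain`, over the newest samples."""
        if len(grain) > len(self.buf):
            raise ValueError("grain is longer than the memory")
        start = len(self.buf) - len(grain)
        for n, x in enumerate(grain):
            self.buf[start + n] += gain * x
```

Because a thrown grain becomes part of what can later be grabbed, repeated grab-and-throw cycles accumulate, which is one simple way to picture the feedback and hysteresis mentioned in the text.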

Here is a screenshot from one possible orientation of the GREIS software:

Sound examples of me performing with the system can be found on the Triple Point project page. More detailed information on the design of GREIS can be found in the following publication:

Doug Van Nort, Pauline Oliveros and Jonas Braasch, “Electro/Acoustic Improvisation and Deeply Listening Machines”, Journal of New Music Research, 42(4), pp. 303-324, December 2013.