A series of two pieces combining text/graphic scores and a custom gestural language, for large-scale ensemble in the telematic medium, created to mark my 20-year anniversary of telematic musicking.

The Original Program Notes for Dispersionology #1:

In the world of physics, dispersion describes a phenomenon in which the rate of propagation of a wave in a medium, its phase velocity, is dependent on its frequency. This can be seen in light, sound, gravity waves, etc. It’s a property of telecommunications signals, including the pulses of light in optical fibre cables, describing how the signal broadens and spreads out as it moves across the channel. Dispersion therefore is inherent in the medium that more-and-more binds us these days, in the movements of light pulses that transport our attention, and our listening, around the globe. A beautiful consequence of dispersion is a change in the angle of refraction of different frequencies, leading to a prismatic opening up of a full colour spectrum from incoming light. This ability to broaden out as signals propagate through the network reflects a much wider expansion of distributed listening and sounding that is made possible in the context of telematic musicking. It occurred to me recently that, as of early 2023, I’ve engaged this medium now for 20 years, with an ear towards exploring the myriad ways that the shared real/virtual and nowhere/everywhere site of performance can act both as a point of convergence towards a singular locus of performative attention and as a dispersive prism, reflecting individual voices and the preservation of creative agencies of every performer.

I call this current exploration of this phenomenon, at this current milestone moment, “Dispersionology”…. I’ve invited a wide array of past telematic collaborators (spanning this entire 20 years) to explore this and other related tales with me on May 10th. I hope you can join us!

-Doug Van Nort

The concert for piece #1 took place on May 10th as Dispersionology and Other Tales with the following program:

Tuning Meditation

Composer: Pauline Oliveros

Performers: All Sites

Dispersionology

Composer: Doug Van Nort

Performers: All Sites

Other Tales: Algorithmic-chance-structured improvisation

Composer: Doug Van Nort

Performers: All Sites

Performers:

Dispersed/Various Locations:

Chris Anderson-Lundy, Saxophone, Toronto, ON

Tom Bickley, EWI + Max processing, Berkeley, CA

Anne Bourne, cello, Toronto, ON

Cássia Carrascoza Bomfim, flute, Brazil

Chris Chafe, celletto, CCRMA/Palo Alto, CA

Viv Corringham, voice, electronics, New York, NY

Björn Eriksson, analog electronics (feedback boxes), Sollefteå, Sweden

Colin James Gibson, guitar, Toronto, ON

Bill Gilliam, piano + electronics, Toronto, ON

Scot Gresham-Lancaster, electronics, Maine

Theodore Haber, violin, Montreal, QC

Glen Hall, Soprano saxophone, contrabass clarinet, Brampton, ON

Holland Hopson, banjo, Tuscaloosa, AL

Rory Hoy, bass + electronics, Brampton, ON

Kai Kubota-Enright, piano (+/- preparation), electronics, Montreal, QC

Al Margolis, violin/contact mic(s)/objects, Chester, NY

Scott L. Miller, Kyma, Minneapolis, MN

Emma Pope, piano, Montreal, QC

Ambrose Pottie, percussion and electronics, Castleton, ON

Dana Reason, piano/inside piano, Oregon State University, Corvallis, Oregon

Omar Shabbar, guitar + electronics, Toronto, ON

Kathy Kennedy, voice + electronics, Montreal, QC

Doug Van Nort, soundpainting, greis/electronics, voice, Toronto, ON

Sarah Weaver, conducting, New York, NY

Zovi, Theremin, Albany, NY

Bogotá (Universidad de Los Andes):

Ricardo Arias, balloons

Leonel Vásquez

(featuring U de Los Andes students)

Chicago (School of the Art Institute of Chicago):

Eric Leonardson, springboard + electronics

Garrett Johnson, electronics

Gordon Fung, electronics

Oslo (Universitetet i Oslo):

Kristin Norderval, voice

Fabian Stordalen, Bass guitar, Guitar, No-input mixing

Kristian Eicke, Guitar (percussive) on lap

Nino Jakeli, Vocals, Guitar, Keyboard

Aysima Baba, Accordion

Alexander Wastnidge, Guitars, Live Electronics

Emin Memis, Ney Flute, Drums

The immersive multi-channel performance – placing the performers at distinct points in the Dispersion Lab space – was recorded with a Zylia 6DOF ambisonic microphone array and is being prepared for future release as a virtual/immersive realization of the piece.

The premiere for piece #2 in this series took place in November 2023 as part of the 2023 NowNet Arts Conference/Festival.

Credits:

Composition and Direction:

Doug Van Nort

Performers – Dispersed/Various Locations:

Chris Anderson-Lundy, Saxophone, Toronto, ON

Tom Bickley, EWI + Max processing, Berkeley, CA

Anne Bourne, cello, Toronto, ON

Cássia Carrascoza Bomfim, flute, Brazil

Chris Chafe, celletto, CCRMA/Palo Alto, CA

Viv Corringham, voice, electronics, New York, NY

Björn Eriksson, analog electronics (feedback boxes), Sollefteå, Sweden

Colin James Gibson, guitar, Toronto, ON

Bill Gilliam, piano + electronics, Toronto, ON

Scot Gresham-Lancaster, electronics, Maine

Theodore Haber, violin, Montreal, QC

Glen Hall, Soprano saxophone, contrabass clarinet, Brampton, ON

Holland Hopson, banjo, Tuscaloosa, AL

Rory Hoy, bass + electronics, Brampton, ON

Kai Kubota-Enright, piano (+/- preparation), electronics, Montreal, QC

Al Margolis, violin/contact mic(s)/objects, Chester, NY

Scott L. Miller, Kyma, Minneapolis, MN

Emma Pope, piano, Montreal, QC

Ambrose Pottie, percussion and electronics, Castleton, ON

Dana Reason, piano/inside piano, Oregon State University, Corvallis, Oregon

Omar Shabbar, guitar + electronics, Toronto, ON

Kathy Kennedy, voice + electronics, Montreal, QC

Doug Van Nort, soundpainting, greis/electronics, voice, Toronto, ON

Zovi, Theremin, Albany, NY

Performers – Performance Nodes:

Chicago (School of the Art Institute of Chicago):

Eric Leonardson, springboard + electronics

Garrett Johnson, electronics

Gordon Fung, electronics

Oslo (Universitetet i Oslo):

Kristin Norderval, voice

Fabian Stordalen, Bass guitar, Guitar, No-input mixing

Kristian Eicke, Guitar (percussive) on lap

Nino Jakeli, Vocals, Guitar, Keyboard

Aysima Baba, Accordion

Alexander Wastnidge, Guitars, Live Electronics

Emin Memis, Ney Flute, Drums

Also Broadcasting from Chicago on Free Radio SAIC

In-person audience at Universitetet i Oslo (IMV Salen)

thee Doug Van Nort Electro-Acoustic Orchestra is an ensemble comprised of a mixture of acoustic and electronic performers. It is an emergent sonic organism that evolves through collective attention to all facets of sound, software instruments and text/graphic pieces created by Van Nort, and gesture-based real-time composition in combination with a growing repertoire of these pre-composed structures that are fluidly traversed and integrated spontaneously during performance. The gestural-compositional conducting language used with the group draws upon Van Nort’s experience in electroacoustic improvisation and its unique approach to sound sculpting, shared signal manipulation, the distinct relationships to time/memory/causality that electronics afford, and works that integrate improvisation with pre-composed structures or generative systems. The language is built upon Soundpainting, with many modifications and additions for this project.

This ensemble builds upon the larger Electro-Acoustic Orchestra project, as a more professional and established group which has now had a fixed membership over a longer time scale. This ensemble emerged from the pandemic, integrating select long-term members coming out of the course-based version of the EAO ensemble, with members of the international community of electro/acoustic improvisers (and notably, collaborators from the international Deep Listening community), via telematic connections. The ensemble meets regularly to work through new materials, gestures-concepts and pieces.

The course-based EAO often acts as a pathway to join the professional ensemble for students and professional performers alike. The professional DVN-EAO ensemble has converged to a much higher degree of refinement and specificity, with Van Nort able to compose more involved sonic structures (both electronics and text/graphic scores) that are rehearsed and can be called upon and nonlinearly combined in performance, as well as much more “two-way mind reading” that happens in the moment.

thee DVN-EAO regularly performs online and in-person (typically with mixed in-person/telematic performers), and invites proposals for venues and festival performances.

Upcoming and select recent performances include:

November 2023: Ongaku no tomo hall, Tokyo, Japan

December 2022: online/streaming performance for the Winter Solstice

October 2022: keynote performance for the exhibition/symposium Sensoria: The Arts and Science of Our Senses with audiences in Gdansk, Poland and at the Dispersion Lab, featuring immersive haptic/light piece by Van Nort.

May 2022: Elka Bong (Walter Wright and Al Margolis) and thee Doug Van Nort Electro-Acoustic Orchestra in first in-person concert at the Dispersion Lab since the pandemic.

December 2021: Works for the Winter Solstice – online/streaming concert.

June 2021: nO(t)pera Summer Solstice Selections – online/streaming concert.

December 2020: Quarantine: A Telematic nO(t)pera, large scale piece online/streaming concert with audience participation.

Credits:

Composition and Direction:

Doug Van Nort

thee Doug Van Nort Electro-Acoustic Orchestra:

Chris Anderson-Lundy (saxophone), Tom Bickley (EWI+electronics), Viv Corringham (voice+electronics), Björn Eriksson (feedback boxes), Rory Hoy (bass+electronics), Kathy Kennedy (voice+electronics), Omar Shabbar (guitar+electronics), Danny Sheahan (violin+electronics), Doug Van Nort (soundpainting/composing/electronics).

Booking:

dispersion[dot]relation[at]gmail[dot]com

Quarantine: A Telematic nO(t)pera is a piece by Doug Van Nort, created for the Electro-Acoustic Orchestra (EAO), for the virtual space of connected isolation, for Casper the cat, and for self-sanity. It is not an Opera, but it is not not an Opera. It is a composition for musical, visual and virtual engagement. The music consists of six movements that span disparate sonic landscapes. It is organized by pre-composed palettes that integrate text, graphics, Soundpainting and software instruments, and are augmented with additional real-time composition via EAO’s unique Soundpainting conducting. This content is a crystallization of ideas that have emerged from months of regular online rehearsals that date back to the beginning of the pandemic, bringing together performers from three continents and numerous time zones. As a meditation on (and a product of) our network-mediated present, the nO(t)pera also introduces diverse networks of improvised collaboration: cross performer-machine collaboration, performer-animal collaboration and audience-machine-performer collaboration.

In one movement of the piece, the audience is invited to improvise drawing inputs that are interpreted by machine learning algorithms and that in turn determine the overall structure and sonic content of the music.

Credits:

Composition and Direction:

Doug Van Nort

Electro-Acoustic Orchestra:

Tom Bickley (EWI+electronics), Lo Bil (voice), Viv Corringham (voice+electronics), Björn Eriksson (feedback boxes), Faadhi Fauzi (synths), Colin James Gibson (guitar), Yuanfen Gu (notpera granular patch), Rory Hoy (bass+electronics), Melanie Jagmohan (guitar+legos), Kathy Kennedy (voice+electronics), Aida Khorsandi (notpera FM patch), Nicholas Lina (bass), Kieran Maraj (kin/electronics), Diane Roblin (inside piano/synths), Omar Shabbar (guitar+electronics), Danny Sheahan (violin+electronics), Peter Vukosavljevic (percussion), Doug Van Nort (conducting/composing).

Live action-or-lack-thereof:

Casper, the cat

Cat-herding and video work:

Stacy Denton

Virtual Staging and visuals:

Rory Hoy

Deep Machine Learning (conducting and drawing recognition):

Kieran Maraj

Origin8 was an interactive dance/media piece which utilized MYO sensing technology. This was a collaboration between Van Nort (music), Sinclair (visuals) and the National Ballet School of Canada (Shaun Amyot, choreographer). My work as composer centred around creating interactive music composition that was driven by the electrical muscle (myogram) and movement activity of 21 dancers (hailing from 21 different countries) in collaboration with machine learning – a kind of biosensing and machine co-creation. The piece was created for the quadrennial Assemblée Internationale festival, which brings together top ballet schools and their select students internationally. In this work, the interactive music applied machine learning to the task of learning mappings between MYO sensing and sonic output. As part of the interactive musical composition, the overall musical structure emerged from the actions and movements of the dancers, so that each of the performances had an overarching form but differed in their details. There are four sections, and the (choreographic and musical) content from sections 1-3 is combined and layered in section 4. Below is a video taken during the dress rehearsal, just prior to the opening.

Credits:

Shaun Amyot (choreography)

Don Sinclair (interactive visuals)

Doug Van Nort (interactive music composition)

Additional Reference:

Doug Van Nort, “Gestural Metaphor and Emergent Human/Machine Agency in Two Contrasting Interactive Dance/Music Pieces”. Proceedings of the International Symposium of Electronic Arts (ISEA), 2020.

This project integrates two MYO muscle-sensing armbands, shared-signal sound processing of an electro-acoustic ensemble and gestural recognition of Soundpainting-style conducting. In the project, the Soundpainter shifts modes between guiding performers, collaborating through movement/sound improvisation, and explicitly processing the sonic output of performers through their movements. These shifting modes of interaction require all performers to become attentive to the tensions between acoustic and electronic sources, between their origination point (instrumentalist vs. Soundpainter) and between bottom-up structured improvisation and top-down guiding via conducting. These continuums are amplified and explored through another layer of shared articulation, as machine learning is applied to recognition of the composer/conductor’s gestures, with symbolic recognition opening up channels of electronic processing and discrete states of potential sound transformation. The underlying machine learning system is also trained on continuous mappings between conducted motion and sonic transformations, allowing the Soundpainter to perform these transformations through their (now free and unconstrained) movements, continuously co-shaping the output with a given performer. The presence of these two distinct modes of machine-mediation creates a tension between the symbology of conducted instruction and that of continuously co-constructed sound, with the Soundpainter and performer sharing signals and intentional resonance in performance. I diagrammed and mapped out this larger human/machine system of listening and co-creation, as can be seen in the below image gallery.

Intersubjective Soundings narrows in on this collective experience as a compositional parameter, allowing for moments of getting “lost” in one another’s sound world and gestural intentions, while needing to pull back to the symbology of soundpainting-based conducting. The work therefore traverses the spectrum of embodied listening-in-the-moment at one extreme, and a reflexive consideration of musical meaning at the other, with both of these modes being mirrored in the movement of the conducting language. This project has been developed in the context of the Electro-Acoustic Orchestra and was premiered at the 2017 International Conference on Movement and Computing (MOCO), with myself at Deptford Town Hall at Goldsmiths University in London, and the EAO at the DisPerSion Lab in Toronto. In this piece, the sense of “listening across” that occurs between instrumental performer and conductor/performer was further heightened through the introduction of a telematic connection between sites.

In 2019 I was invited to present work for a CBC sponsored festival called CRAM. In this context I applied this project to a new piece with another instantiation of EAO, in this case co-located in an acoustically-interesting silo space on York University’s campus known as Vari Hall. We played it twice that evening, and someone was kind enough to post a phone-recorded video of one of the sets.

Credits

Piece Creation/Direction: Doug Van Nort

EAO for the London performance was:

London: Doug Van Nort (composing/conducting, MYO-based transformations)

DisPerSion Lab: Dave Bandi (guitar), Chris Cerpnjak (cymbals, glockenspiel), Glen Hall (saxophone), Ian Jarvis (catRT+supercollider), Ian Macchiusi (Moog mother), Mackenzie Perrault (guitar), Danny Sheahan (keys, samples), Fae Sirois (violin), Lauren Wilson (flute)

EAO for the CRAM performance was:

Doug Van Nort (composing/conducting, MYO-based transformations)

Chris Anderson-Lundy (saxophone), Dave Bandi (guitar), Chris Cerpnjak (cymbals, glockenspiel), Erin Corbett (analog synth), Glen Hall (saxophone), Rory Hoy (bass), Ian Jarvis (catRT+supercollider), Kieran Maraj (electronics), Ian Macchiusi (Moog mother), Mackenzie Perrault (guitar), Danny Sheahan (violin+electronics), Fae Sirois (violin), Lauren Wilson (flute)

Additional Reference:

Doug Van Nort, Conducting the In-Between: Improvisation and Intersubjective Engagement in Soundpainted Electro/Acoustic Ensemble Performance, Digital Creativity, 29(1), 68-81, 2018.

Elemental Agency is a piece concerned with an emergent gestural language that manifests across movement, sound and light. Rather than sound/light articulating movement, or movement driving media, the approach builds outward from gestural metaphors as a point of shared intersection for these phenomena. The work draws upon the metaphorical constructs of Japanese Godai and Indian Vaastu Shastra theories of five elements: earth, water, wind, fire and void/space. Working with conceptual metaphors drawn from these traditions, constraints are provided to the dancers of embodying a given element as a collective – a texture of movement, rather than a singular human entity, that manifests the non-human agency of a given element. Motivations related to world, body, motion, emotion and enaction are given: earth as stubbornness and resistance to change, wind as expansive, elusive and compassionate, fire as energetic and forceful, etc. Using machine learning methods, the sonic interaction designers seek to capture moments of gestural expression and fuse this with sounds that similarly embody a given elemental quality/profile. Negotiation between art forms leads to a collective choreography and sound design that emerges from the embodiment of the nonhuman elements and the mediation of the machine agents. Visual projection functions as both lighting and enhancement of embodied experience in space, rather than screen-oriented media experience. Spotlights both enhance elemental qualities as well as embodying behaviours that align with the constraints of the element metaphors: rigid tracking of movement within earth state, amorphous following within water state, tendency towards consumption in fire state, etc.

The piece moves through short movements of distinct elements: water swells and then recedes into earth, which tectonically shifts before dispersing to the wind, dying down to invoke fire. Gestures and movement are recognized and mapped to sound and light output. The collective texture of these elements then shifts to the individual gesturality of each dancer, who singularly chooses to embody a given element: moving from the collective texture of mono-element to the collision and transformation between elements, passing between dancers. The pervasive aether of machine listening recognizes gestures captured throughout the four-elements sections and re-injects loops of these into the performance: this aether ever-listening to elements, and re-performing its understanding of each dancer’s elemental offerings. Increased density and destabilization of dancers’ elemental transformations lead to an ascendance into the void: pure energy, beyond the everyday, a distribution/suspension/expansion of presence and expression. Void is devoid of any object-hood or gestural points of reference.

The piece rests in void state during its installation period. If audience members interact with the elemental portal (via a browser on a laptop or mobile device), they may bring the piece back into the world of a given elemental state. The performance space then invites interaction from the audience.

Credits:

Doug Van Nort: conception, direction, system composition, void state sound/interaction design

Vanessa Boutin, Holly Buckridge, Shaelynn Lobbezoo, Joshua Murphy, Paige Sayles, Marie-Victoria de Vera: emergent and collective choreography, dance

Yirui Fu: spotlight visual/behaviour design

Akeem Glasgow: kinect tracking and mapping to visuals

Rory Hoy: earth state sound/interaction design

Ian Jarvis: fire state sound/interaction design

Kieran Maraj: water, wind states sound/interaction design

Michael Palumbo: mobile audience interaction, network architecture programming

Mingxin Zhang: spotlight visual/behaviour design

Additional References:

Van Nort, D. “Gestural Metaphor and Emergent Human/Machine Agency in Two Contrasting Interactive Dance/Music Pieces”. Proceedings of the 2020 International Symposium of Electronic Arts (ISEA), 2020.

Jarvis, I., D. Van Nort. Posthuman Gesture, in Proc. of the International Conference on Movement and Computing (MOCO), 2018.

This piece engaged the performance space as a “total work” that included visuals and a sense of dramaturgy driven by the composition of time and interactivity – and thus it is a “nO(t)pera”, i.e. it is not an Opera but it is not-not an Opera. It is a semi-structured, semi-improvised performance linking five performers and their audience across one virtual and five real sites of performance. The musicians utilize their deep listening skills as they listen across networks, across N. America, and across radically different acoustic spaces and instrumentations, in order to find convergence through musical dialogue. Performers from Stanford (California), RPI (New York) and York Special Projects Gallery were projected onto materials within the DisPerSion Lab, forming an uncanny trace of their bodily presence, embedded on a blended “stage” with a live electronics performer. Public activity from the hallway of the neighbouring building on York’s campus, leading up to the Special Projects gallery where Bourne was performing, was mapped into visual and sonic art and projected within DisPerSion Lab, creating a texturized “double” of the activity just outside the Gallery site of performance. The Dispersion Lab website provided another realization of the performance, allowing audience to chat, interact via twitter, listen to individual streams or the entire mix, and alter the outcome by conducting the musicians using a web-based interface during one section of the piece. The York-based audience was invited to wander between the differing performative realities of the public spaces, the virtual online platform, the live performance within the gallery, and the live performance within DisPerSion lab. The overarching composition and design vision for the piece was an uncanny sense of being/not-being “there”, as one moved between local sites while hearing the sounds of other neighbouring performance locations in the distance. 
Students from Van Nort’s “Performing Telepresence” course contributed visual design work for the staging, theatre lights and projection, as well as programming and administrative work.

Conception/Composition/Direction:

Doug Van Nort

Performers:

Anne Bourne (cello) – York Special Projects Gallery, York University Toronto, ON

Chris Chafe (celletto) – CCRMA, Stanford University, Palo Alto, California

Pauline Oliveros (V-Accordion), Jonas Braasch (Soprano Saxophone) – CRAIVE Lab, Rensselaer Polytechnic Institute, Troy, NY

Doug Van Nort (greis/electronics) – DisPerSion Lab, York University, Toronto, ON

Scenography/Visual and Lights/Technical Production/Promotions

Gale Cabiles – promotional video and graphics

Kevin Feliciano – audio networking and DisPerSion Lab sound engineering

Akeem Glasgow – public, interactive sonic instrument

Radi Hilaneh – video processing and in-York networking

Justin Hsieh – Gallery design and layout

Raechel Kula – DisPerSion Lab projection surfaces and visual design

Rory Hoy – network mapping design

Candy Hua – DisPerSion Lab

Tony Liu – remote conducting of performance sites

Sam Noto – visual streaming to virtual performance site

Sarah Siddiqui – website audio remixing/streaming

Keren Xu – promotional video and graphics

Yirui Fu – network lighting control, sound-to-light mapping DisPerSion Lab

Mingxin Zhang – lighting effects, DisPerSion Lab

Cary Zheng – video networking between sites, documentation

Keke Zhou – public, interactive visual instrument

This was a collaboration with York University Associate Librarian William Denton and the students of my “Performing Telepresence” course. Denton created a real-time sonification of the library’s reference desk activity, called STAPLR (Sounds in Time Actively Performing Library Reference). Equal parts installation, sonification, and performance, the STAPLR Dispersion piece created a performative conversation with the library space, influencing the data streams and drawing attention to the practices and rituals of the library. We created sound and light instruments to receive the library data, which defined an immersive space within the DisPerSion Lab that spatialized the sound and light based on the library branch that the data was coming from. Twitter was scraped for hashtags and keywords, which modified the results and were displayed as part of the piece. Laptop stations showing the stream from the lab, the sonification stream and the Twitter comments were placed at five different library branches and students embedded themselves (quietly!) with headphones at each station. Library patrons were made aware of the ongoing activity and encouraged to join in and influence the piece either by tweeting or by engaging the reference desk, thereby altering the sonified data and engaging the public in a performative interaction with the library space. Various students and librarians moved between libraries and the immersive lab space, creating a meditative engagement with public space, data, archives and performative practices in everyday life.
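As a purely illustrative sketch (not the instruments built for the piece), the idea of spatializing sound and light according to the library branch an event came from could look something like the following. The branch identifiers, the even spacing around the listener, and the `intensity` value are all hypothetical assumptions; the actual data fields and mapping used with STAPLR are not described here.

```python
import math

# Hypothetical branch identifiers; the real branch names are not given in the text.
BRANCHES = ("branch_a", "branch_b", "branch_c", "branch_d", "branch_e")


def branch_azimuth(branch: str) -> float:
    """Return an azimuth in degrees, spacing the branches evenly around the listener."""
    if branch not in BRANCHES:
        raise ValueError(f"unknown branch: {branch!r}")
    return BRANCHES.index(branch) * 360.0 / len(BRANCHES)


def place_event(branch: str, intensity: float) -> dict:
    """Turn one incoming data event into a position and level for sound and light.

    `intensity` is an assumed, already-normalized measure of desk activity;
    it is clipped to the range [0, 1].
    """
    azimuth = branch_azimuth(branch)
    angle = math.radians(azimuth)
    level = min(1.0, max(0.0, intensity))
    return {
        "azimuth_deg": azimuth,
        "x": math.cos(angle),  # position on a unit circle around the listener
        "y": math.sin(angle),
        "level": level,
    }
```

Under these assumptions, an event from the first branch lands directly "in front" (azimuth 0), and each further branch is rotated by 72 degrees, so listeners in the lab could in principle hear which branch was active.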

Credits

Conception and Direction:

Doug Van Nort

Library Data Sonification:

William Denton

Sound, Light and Text Instrument Design:

Doug Van Nort and students from the “Performing Telepresence” course: Gale Cabiles, Kevin Feliciano, Floria Fu, Akeem Glasgow, Radi Hilaneh, Rory Hoy, Justin Hsieh, Candy Hua, Raechel Kula, Sam Noto, Sarah Siddiqui, Keren Xu, Carey Zheng, Mingxin Zhang, Keke Zhou, Tongliang Liu.

Further Reading

Doug Van Nort, “Distributed Networks of Listening and Sounding: 20 Years of Telematic Musicking”, Journal of Network Music and Arts 5 (1), 6, 2023.

This piece intersects the open airwaves nature of transmission arts with the distributed potential of telematic music performance.

It further plays with a changing, collaborative performance topology, which moves between moments when individuals (both in the room and potentially around the globe) influence the piece as it unfolds (a many-to-one relationship) and moments when I am able to ping local mobile devices, which then act as small speakers/spatializers of sonic content (a one-to-many relationship).

The structure of the work is emergent, and is driven by an evolutionary algorithm. The audience helps to guide this emergence (i.e. collective modulation of a networked system), while I provide the piece with an overall shape, spectral content, texture and spatial character.

Thus a tension exists between open, democratic creative practices such as can be found in “computer network music” or laptop ensemble paradigms, and an electroacoustic improvisation paradigm where sonic content can be refined and directed musically, richly working with the performance space to create an immersive experience.

This piece is the third in a series of digital music compositions by Doug Van Nort that explore distributed creativity and collective content evolution. The first (On-to-genesis), written for Composers Inside Electronics and performed at Roulette in 2012, explored collective evolution of a genetic algorithm. The second (discursive/dispersive), written for the MICE ensemble and performed at the University of Virginia’s Zerospace festival, explored remote conducting and control of a digital ensemble at a distance. This piece evolves this conceptual territory to explore an open transmission paradigm and the blurring of audience/performer boundaries.

The piece was premiered at the New Adventures in Sound Art’s TransX Transmission Arts Festival in May 2015.
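For readers curious about what audience-guided evolution can mean in practice, here is a minimal sketch of one generation of an interactive genetic algorithm, in which audience ratings stand in for a fitness function. This is an assumption-laden illustration, not the system used in the piece: the function name `evolve`, the encoding of each candidate as a list of sound parameters in [0, 1], and the selection, crossover and mutation choices are all hypothetical.

```python
import random


def evolve(population, ratings, rng, mutation_scale=0.1):
    """Produce the next generation from audience ratings (hypothetical sketch).

    population: list of candidates, each a list of floats in [0, 1]
    ratings: one non-negative audience rating per candidate
    rng: a random.Random instance, passed in so results are reproducible
    """
    if len(population) != len(ratings):
        raise ValueError("one rating per candidate is required")
    if any(r < 0 for r in ratings):
        raise ValueError("ratings must be non-negative")
    # If nobody rated anything, fall back to uniform selection.
    weights = list(ratings) if sum(ratings) > 0 else [1.0] * len(population)

    next_generation = []
    for _ in range(len(population)):
        # Rating-weighted selection of two parents (with replacement).
        parent_a, parent_b = rng.choices(population, weights=weights, k=2)
        # Uniform crossover: each parameter comes from one parent or the other.
        child = [a if rng.random() < 0.5 else b for a, b in zip(parent_a, parent_b)]
        # Gaussian mutation, clipped back into [0, 1].
        child = [min(1.0, max(0.0, g + rng.gauss(0.0, mutation_scale))) for g in child]
        next_generation.append(child)
    return next_generation
```

In a sketch like this, the audience shapes which material survives and recombines, while a performer remains free to shape how each candidate's parameters are actually rendered as sound.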

Triple Point – Pauline Oliveros, Doug Van Nort, Jonas Braasch – was an improvising trio whose core instrumentation was soprano saxophone, greis/electronics and V-accordion. The name refers to the point of equilibrium on a phase plot, which acted as metaphor for our improvisational dialogue. Our musical interaction was centered around an interplay between acoustics, physically-modeled acoustics (v-accordion) and electronics. Van Nort captured the sound of the other players on-the-fly, either transforming these in the moment to create blended textures or new sonic gestures, or holding them for return in the near future. Oliveros changed between timbres and “bended” the intended factory sound models through her idiosyncratic use of the virtual instrument, while Braasch explored extended techniques including long circular-breathing tones and multiphonics. This mode of interaction resulted in situations where acoustic/electronic sources are indistinguishable without very careful listening, while at other times the distinction becomes wildly apparent. This continual, fluid morphing is a product of Deep Listening and living in the moment.

“phase/transitions”, a 3-CD set of unedited live improvisation ranging from 2008-2012, was released by Pogus Productions in September 2014. The release features Chris Chafe as a special guest on six tracks.

The trio collaborated on the composition/improvisation project “Quartet for the end of Space” with Francisco López in 2010/2011, also available on Pogus.

In 2009, Triple Point released “Sound Shadows” on the Deep Listening label, which documents a quartet improvisation with Stuart Dempster.

The trio performed together countless times since their inception in 2008, in a variety of festivals and galleries, over the internet with the trio dispersed around the globe, and in experimental exploration of electroacoustic technologies such as machine improvising partners (Van Nort’s FILTER system), automated conducting systems and immersive spatial sound environments based on acoustic modeling of unique spaces.

Triple Point Was:

Pauline Oliveros – accordion/v-accordion

Doug Van Nort – greis/electronics, voice

Jonas Braasch – soprano saxophone

Further Reading:

Doug Van Nort, Multidimensional scratching, sound shaping and Triple Point. Leonardo Music Journal, vol. 20, December 2010.

Created for the Summer Solstice 2014 at Socrates Sculpture Park in Queens, NY and commissioned by Norte Maar. This piece involved the placement of hydrophones in the East River, and a process of improvisational auscultation of the underwater environment and transformation of the sound discovered on this longest day of the year.

This piece was described well (aside from the fact that these were live audio streams and not recordings!) in 2014 by Hyperallergic:

“Doug Van Nort presented an improvised performance based on audio recordings taken from the East River, on which the park is settled. The improvisation shifted between murky drones and deep earthly noise, as well as the occasional rhythmic popping. The sounds were entirely derived from signals transmitted from hydrophonic microphones placed in the river, displacing and relocating the unheard sounds of the aquatic environment above the surface.”

Created in collaboration with Heidi Boisvert and an international team of artists, [radical] Signs of Life is a large-scale biophysical dance show.

Through responsive dance, the piece externalizes the mind’s non-hierarchical distribution of thought. Media is generated from dancers’ muscles and blood flow via biophysical sensors that capture sound waves from the performers’ muscular tissue. The choreography for the hour-long performance is composed in real time by five dancers from a shared movement database in accordance with pre-determined rules based on principles of self-organizing systems. Outfitted with two wireless sensors each, the dancers–Jennifer Mellor, Ellen Smith Ahern, Hanna Satterlee, Avi Waring and Willow Wonder–create patterns that dissolve from autonomous polyrhythms to intersecting lines as they slip through generative video and light. Van Nort improvises original multi-channel electroacoustic music live in collaboration with new interactive dancer/sound instruments that he has created (using Xth Sense technology), to sculpt a dense web of complex texture and emotion around the audience.

Regarding my interactive composition for this project:

All sound is generated from the dancers’ muscles. During performance, the qualities and patterns of the sonic gestural output from each dancer are learned/remembered by an interactive system. Near the conclusion, the stage lighting goes down and I improvise with this material towards an ending to the hour-long piece.

[radical] premiered at EMPAC (NY), the Experimental Media and Performing Arts Center, in May 2013 through generous support from the Arts Department at Rensselaer Polytechnic Institute along with iEAR Studios. Rehearsal space was granted through an Artist Residency at the Contemporary Dance & Fitness Center in Montpelier, VT. The project was realised thanks to the Creativity + Technology = Enterprise grant, awarded by Harvestworks through funds from the Rockefeller Foundation’s New York City Cultural Innovation Fund and the National Endowment for the Arts.

Credits:

Director & Producer // Heidi Boisvert

Choreographer // Pauline Jennings

Music/Sound Designer // Doug Van Nort

Visual Designer // Raven Kwok

Lighting & Set Designer // Allen Hahn

Costume Designer // Amy Nielson

Xth Sense Creator // Marco Donnarumma

Wireless Network Engineer // MJ Caselden

Industrial Designer // Krystal Persaud

Set Fabricator // John Umphlett

Dancers // Jennifer Mellor, Ellen Smith Ahern, Hanna Satterlee, Avi Waring & Willow Wonder

Additional Reference:

Van Nort, D. [radical] signals from life: from muscle sensing to embodied machine listening/learning within a large-scale performance piece, in Proceedings of the 2nd International Workshop on Movement and Computing (MOCO), ACM, 2015.

This is a piece for networked laptop ensemble, and was commissioned for the festival Zerospace: an interdisciplinary initiative on distance and interaction at the University of Virginia, organized by Matthew Burtner.

It was created for the M.I.C.E. laptop ensemble at UVA. For the performance, I “conducted” the piece telematically from the Experimental Media and Performing Arts Center (EMPAC) in Troy, NY, with performers from the MICE ensemble (and the audience) located at the University of Virginia in Charlottesville.

The piece is built around a granular synthesis software instrument that includes a genetic algorithm (GA) to evolve sound forms, a set of specific samples, and a score to guide our performance. My conducting includes remote control of higher-level granular qualities for the group, as well as text/colour instructions for the full ensemble or a sub-group, sent via OSC. Each performer had control over mixing up to six different granular voices, as well as control over the GA evolution of their own sonic “gene pool” of sounds.
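As a rough illustration of what a text/colour instruction sent via OSC could look like on the wire, here is a minimal sketch that encodes an OSC 1.0 message using only the Python standard library. The address `/ensemble/instruction`, the argument layout (a text string followed by three RGB integers) and the helper names are hypothetical; the actual messages used in the piece are not documented here.

```python
import struct


def osc_string(text):
    """Encode an OSC string: ASCII bytes, null-terminated, padded to a multiple of 4."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *args):
    """Build an OSC 1.0 message from str, int and float arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        elif isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # 32-bit big-endian integer
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # 32-bit big-endian float
        else:
            raise TypeError(f"unsupported OSC argument type: {type(arg).__name__}")
    return osc_string(address) + osc_string(tags) + payload


# Hypothetical instruction: a text cue plus an RGB colour for the ensemble.
packet = osc_message("/ensemble/instruction", "sparse grains", 255, 0, 0)
```

The resulting bytes could then be sent to each performer's machine in a UDP datagram (for example with `socket.sendto`), which is a common transport for OSC.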

Below are snippets from the pdf score, my conducting window, the performer instruments, and a reaction from one of the ensemble members, from our rehearsals leading up to the performance.

Credits:

Composition:

Doug Van Nort

Performers:

M.I.C.E. Ensemble 2013 (dir. Matthew Burtner)

Festival Organization:

Matthew Burtner, Sarah O’Halloran

I wrote this piece for Composers Inside Electronics (CIE), and it premiered at Roulette on October 2nd, 2012. The performers were John Driscoll, Tom Hamilton and Doug Van Nort. The piece is for an ensemble of laptops that control the evolution of a genetic algorithm, which in turn shapes many layers of sonic textures and gestures that fly about the room, and in this case across the venue’s eight-channel sound system. An additional player is a machine entity that remembers the sequence of sonic actions throughout the performance, recalling certain sections at particular moments of the piece and recombining this past sonic memory while spatializing it throughout the room. In this way the piece strikes a balance between the immediacy of the evolving group decisions and a larger structure for the piece.

As I often like to do, for On-to-genesis I composed both a software instrument and a score. The instrument contains the GA process, a series of granular synthesis sound modules and presets that constrain possible sound materials and saved sonic states. I like this approach as it allows me to provide compositional constraints (e.g. begin from preset X in section Y) yet maintains a focus on listening that turns a lot of improvisational control over to the performer. Part of the performer’s control is that they define what is the most “fit” state of the system, which biases the evolution of the algorithm and in turn the internal sonic structure that emerges from this. You might say that I provide a larger form and constraints on the process, and the internal structure emerges from within this. The following is a video that shows the instrument in action. If you would like to see a longer video that demonstrates how the patch/algorithm works it can be found here.

The result from the premiere was an immersive experience that sounded quite good in the hall. The following is a recording of the final 9 minutes of this 20-minute composition. You can hear some of the spatial motion; imagine yourself in a beautiful theatre surrounded by an 8.2 channel sound system.

The piece is scalable to more performers. As with most of my pieces created for laptop ensembles, while it is written for good listeners with sonic/musical sensitivity, it does not presuppose any musical training.

A laptop ensemble composition written for and performed by Tintinnabulate at the Arts Center of the Capital Region in Troy, NY. This piece brought together signal-sharing of group tempo and musical key (similar to Awakenings) and circuit-bent electronics, and also introduced a “human cyborg” performer who could be instructed by the audience in another space within the building to engage (or even interfere) in the performance, to dance, etc. Audience members were also allowed to come up to a computer located in the performance space running the software instrument, becoming members of the ensemble. In this way, the piece played with a hyper-localized sense of expanded presence, with breaking down the “fourth wall” of audience-performer engagement, and with shared influence of musical structure, while hand gestures and a text and graphic-based score acted as a centering principle for performance actions.

Credits:

Composition and Conception:

Doug Van Nort

Location:

Arts Center of the Capital Region, Troy, NY

Performers:

Tintinnabulate (Pauline Oliveros, Doug Van Nort, Jonas Braasch, Evan Gonzalez, Zovi McIntee, Sean)

Audience Participation

Human Robot: Joshua Shinavier

Paralleling my series of Genetic Orchestra pieces, I created two ensemble pieces for acoustic instruments and electronics. The idea was that each sonic gesture is a meme that enters into a system and whose general gestural character evolves over generations, “cross-breeding” with the sounds of fellow performers.

Memetic Orchestra #1 was written for Triple Point and an early version of the FILTER system. It was premiered at the New York City Electroacoustic Music Festival in April 2009. The work explores the use of timbral/textural analysis, gesture recognition and evolutionary algorithms. The sound qualities of the sax and accordion are analyzed and the ‘sonic gestures’ are recognized on the fly by an artificial ‘agent’ who then steers a genetic algorithm, influencing Van Nort’s software performance system. The acoustic performers therefore influence an agent, who in turn influences the software system being performed by a laptop performer, who in turn influences the listening of the agent, and all are influenced by the overall sound output.

In the first few minutes of this piece the agent controls the sound processing with minor human tuning, and then over the final 7-8 minutes the laptop performer first plays in concert with the agent before taking over to play the GREIS system as well as the evolutionary process itself.

While the piece does explore complex systems and some heavy algorithms (the power of two laptops was needed to run all the software), at its heart it is really about listening for all three performers – to the electronic/acoustic balance, to the role of the agent, to the intentions past and present, to the evolutionary trajectory, and so on.

Memetic Orchestra #2 was written for an ensemble of instruments and cross-synthesis algorithms. It was invited for a performance at the Flea Theatre’s “Music with a View” series in November 2009. An exploration in textural transformation and timbral sustain, the piece extracts audio qualities from each player to provide a form within which players articulate subtle inflections, their timbres merging to form a sometimes sparse, sometimes dense collage of each sound’s internal matter.

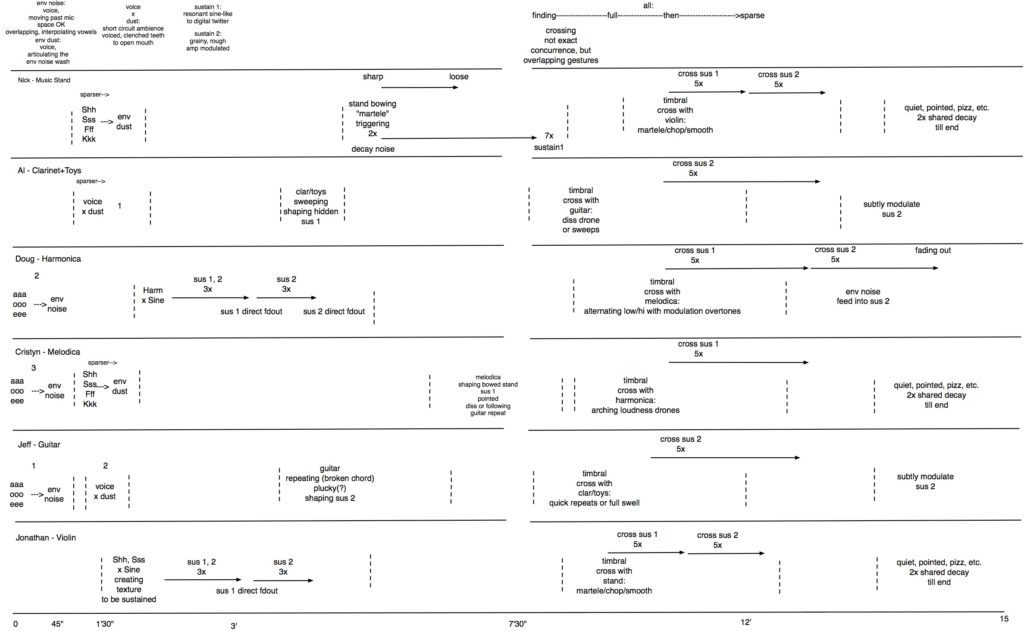

Graphic/Timeline Component of Score for Memetic Orchestra #2:

Performers for this premiere included:

Van Nort, harmonica

NF Chase, Bowed Music Stand

Jonathan Chen, Violin

Cristyn Magnus, Melodica

Al Margolis, Clarinet and Toys

Jefferson Pitcher, Electric Guitar

The basic premise of these “laptop orchestra” pieces is that participants rate sound files, and the more “fit” members of the population are chosen for “mutation” (sound alteration) and “mating” (juxtaposition or morphing of sonic material). Using this genetic algorithm process I have developed a scheme in which performers-turned-sound-mutators rate files, which are then selected through an algorithmic process based on the cumulative fitness ratings. In this set of works the evolutionary paradigm is directed by compositional rules, including the nature of the beginning “gene pool” and the type of mutation/crossover. At the same time, the process directs the structure of the score itself, determining the number of sections, the number of players in each section, which sonic “generation” each player can draw from, and so on. Within these confines, the performance is improvisatory, drawing on each epoch of the evolved sonic gene pool. In this way, there are actually two pieces to speak of. The first is the process and experience of creating this pool, where the participants experience their own sounds and mutations interacting with those of other players over time. This is an anonymous process where identities and dialogues are formed completely through the digital media. The second piece is the performance and score, when this entire days-or-weeks-long process of the pool’s unfolding is collapsed into 20-40 minutes of performance. Each performer at this point has an intimate understanding of the material, and modulates this pool in an attempt to convey the process to a new audience. This series of pieces is collectively titled “Genetic Orchestras”, each generally being named “X Genetic Orchestra” where X is the name of the ensemble.
There currently exist seven pieces in the repertoire, which have been performed on at least eight occasions, including the International Society of Improvised Music conference in 2007, in performance with the Florida Electronic Arts Ensemble (FLEA) directed by composer Paula Matthusen at several festivals in the Miami area, and by student groups at universities including RPI.
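The selection step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it uses fitness-proportional ("roulette wheel") selection over summed ratings, a fixed mutation probability, and placeholder file names, none of which are taken from the actual Genetic Orchestra software. The "mutate" and "mate" operations are returned as plans rather than performed on audio.

```python
import random


def select_parents(ratings, k, rng):
    """Pick k sound files with probability proportional to cumulative rating.

    `ratings` maps a file name to the list of ratings it received;
    a file's fitness is assumed to be the sum of those ratings.
    """
    names = sorted(ratings)
    fitness = [sum(ratings[name]) for name in names]
    return rng.choices(names, weights=fitness, k=k)


def next_generation(ratings, size, mutation_rate=0.3, seed=None):
    """Plan the next sonic generation as a list of mutate/mate operations."""
    rng = random.Random(seed)
    plan = []
    for _ in range(size):
        if rng.random() < mutation_rate:
            (parent,) = select_parents(ratings, 1, rng)
            plan.append(("mutate", parent))  # alter a single sound
        else:
            # Parents are drawn with replacement, so a file may meet itself.
            a, b = select_parents(ratings, 2, rng)
            plan.append(("mate", a, b))  # juxtapose or morph two sounds
    return plan


# Hypothetical gene pool with ratings collected from several participants.
ratings = {
    "drone.wav": [5, 4, 5],
    "click.wav": [1, 2],
    "voice.wav": [3, 3, 4],
    "hiss.wav": [0, 0],
}
generation = next_generation(ratings, size=6, seed=1)
```

In this sketch a file whose ratings sum to zero, such as `hiss.wav`, is never chosen, while highly rated files recur more often across the plan. Compositional rules of the kind mentioned above would then constrain which operations are allowed and how they are realised in sound.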

The very first piece was commissioned by the Deep Listening Institute for the “Deep Listening Convergence” event in June of 2007, which brought together 40+ musicians over the internet in a six-month-long “virtual residency” before the final convergence of performances. As such this version is titled “Deep Listening Convergence Genetic Orchestra”, or DLCGO. This version was special not only because it was the first, but because we evolved the piece completely over the internet, and over such a long time period. The recording of the premiere performance was presented on the CD compilation “Listening for Music Through Community” released with the 2009 Leonardo Music Journal. Here is a recording excerpt from DLCGO.

Many versions have been performed, such as this one in the lobby of EMPAC:

The Toneburst Ensemble at Wesleyan (directed by Paula Matthusen) performed a version that I named ToneGO at the SHARE NYC event in Brooklyn. This is the first piece to include the GA process applied to video – with some really striking results.

For this series of pieces, I’ve created a simple piece of software that was designed with the intention of allowing players to loop and mix the sound files while independently changing the pitch or timbre. This also allows players to tap in a tempo and to change the character of the sound in simple but uniform ways. This application has become a central part of the pieces, allowing me to compose for the software instrument as well as for the process.

This piece resulted from a collaboration with the Topological Media Lab (TML) and XS lab of Concordia University. Sonic Tapestry is the composition and sound/interaction design by Doug Van Nort for the instrument known simply as the Tapestry (or Sensate Tapestry), created in the context of the WYSIWYG project, with electronics by David Gauthier and Elliot Sinyor (TML) and tapestry weaving by Marguerite Bromley (XS).

Sensate Tapestry is a 20′ x 6′ ornate fabric created from conductive thread, which senses human proximity and touch. For this piece, Van Nort exploited the very volatile and nonlinear nature of the design in order to compose an interaction that changed depending on the nature of one’s touch, where gestures such as bunching, rubbing and lightly stroking all led to different sound worlds. The energy and number of inter-actors also defined the complexity of the sound evolution over time.

Below is a video that demonstrates the responsiveness of the piece and its reaction to different gestures, and then moves on to a situation in which the presence of more hands directs the piece into a different state, wherein the tapestry exhibits more life and complexity.

This piece was first shown at the Remedios Terrarium gallery show (2007) at Concordia University, and later at the International Computer Music Conference (2009).